This article is a discussion-starter for what will be the main focus of this website during the coming period: exploring the claim that we are living in an exceptionally important time, with decisions taken now likely to have a disproportionate and possibly decisive impact on the long-term prospects for humanity and human civilization.

Over a decade ago the late British philosopher Derek Parfit started a debate about whether the time we inhabit is the hinge of history, a period of disproportionately significant, possibly decisive, importance for the long-term future of humanity, with the implication that there is a particular obligation on the present generation to “get things right”.

Parfit was the most important modern advocate of the ethical theory known as Consequentialism, the doctrine that the right action is the one that produces the most beneficial overall consequences. Consequentialism is generally interpreted as a universalist theory, in the sense that all people, including generations born in the distant future, must at least in principle be accorded the same moral significance.

Other philosophers of the Consequentialist school took up the debate. One of them, William MacAskill of Oxford University, managed to take the argument outside the realms of academia, coining the word longtermism to describe his view. Unusually for a philosopher, he gained significant attention in the mainstream media, influenced powerful figures in tech and finance, and wrote a best-selling book elaborating his view, What We Owe the Future: A Million-Year View. Elon Musk, for one, has explicitly endorsed MacAskill’s view. The cryptocurrency fraudster Sam Bankman-Fried was also a fan, even appointing MacAskill as an ethics adviser (fewer bragging rights about that one!).

Parfit’s main argument for the hinge theory was that we live in an age of transformative technologies that, on the plus side, may provide solutions to some of the world’s most intractable problems, but also create multiple new forms of existential risk, including the possibility of species extinction, at a time when humanity is still confined to a single planet.

This reasoning is termed the time of perils argument and is tied to the growing interest in recent years in the problem of existential risk, which regularly figures in public discourse, most often in the context of climate change. Since the main effects justifying climate change’s status as an existential issue are likely to pan out over the present and subsequent centuries, anyone seriously bothered about it is a longtermist, with respect to this issue at least.

But here is the problem: it turns out there is much more to existential risk than climate change, nuclear war and lethal pandemics, the dangers that get the most current attention. As has been revealed by the work of researchers associated with a number of recently established cross-disciplinary centres devoted to existential risk and human futures, some of the most serious existential risks scarcely figure in public debates.

Toby Ord, a researcher at one such centre, the Oxford-affiliated Future of Humanity Institute, has attempted to identify and quantify the main sources of existential risk humanity could face in the coming century, and set out his conclusions in a book The Precipice: Existential Risk and the Future of Humanity. He estimates the probability of at least one of the risks eventuating at one in six, which is not the highest recent estimate. The British cosmologist and former Astronomer Royal Martin Rees put it at one in two.

As the title of Ord’s book implies, he thinks we are traversing a particularly dangerous period in human history, one from which our species could pass to the sunlit uplands of a future in which technology provides solutions to some of our most pressing problems (diseases, short lifespans, poverty, environmental degradation, and so on), provided that we don’t succumb to one or more of the dangers that he surveys.

Just about all of that posited risk is attributable to anthropogenic (human-created) risk factors rather than purely natural ones. These include synthetic pathogens, out-of-control molecular nanotechnology, and physics experiments, like those at CERN’s Large Hadron Collider, that create extreme conditions which, some argued, could destroy the earth.

In regard to the latter, it is worth reflecting that the scientists who developed the atomic bomb in the 1940s were seriously concerned that a fission bomb detonation could trigger a chain of fusion reactions in the atmosphere and oceans that would vaporize the surface of the earth. They estimated the probability of this at slightly under 3 in a million, and decided on this basis it was appropriate to proceed!

In a separate paper, Ord and two colleagues put the upper bound on the risk of human extinction from purely natural causes at 1 in 14,000 in any given year, a rounding error on the overall risk profile.

According to Ord, by far the greatest of the anthropogenic risks is an item that, until very recently, few would have taken all that seriously and that scarcely figured in public debate: unaligned artificial intelligence. This refers to the possibility that a super-intelligent AI, far exceeding the intelligence not just of any individual human but of humanity as a whole, might develop goals incompatible with human values, or even with human survival.

In his order-of-magnitude estimates, Ord puts the existential risk of unaligned AI at 1 in 10, compared to which the risk from climate change, estimated at 1 in 1,000, seems like small potatoes. This claim, unsurprisingly, was controversial, drawing some trenchant rebuttals, including one by another philosopher, Émile Torres, that weaves climate change into a broader denunciation of the entire longtermist philosophy.

The issue is partly definitional: what counts as an existential risk? Existence of what? Humanity, life on earth, civilization-as-we-know-it? Russia as Vladimir Putin would like it – an expanding imperial state? Existential risk is nowadays regularly invoked as a trump card in public policy debates, leading to a devaluation of the currency.

Not every catastrophic event is existential, if the term is to have any meaning. Not even climate change, about which even so prominent a scientific advocate of climate action as Michael Mann concedes: “there is no evidence of climate change scenarios that would render human beings extinct.” A genuine extinction-level risk, the prospect of untold future generations forgone, with all that implies, obviously deserves special attention.

Unaligned AI, according to some of those most closely involved in the development of the field, does pose such a risk. Ord’s one-in-ten estimate of its likelihood is consistent with a survey of AI experts published in August 2022. At the very least, the emergence of a super-intelligent AI hostile or indifferent to human values would result in a complete loss of human agency over the earth, with the implication that the continued existence of human civilization, and of humanity itself, would be at the discretion of such an entity.

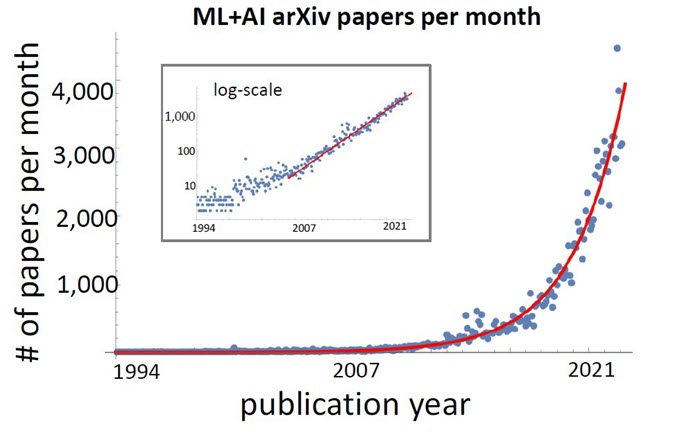

How real, and how imminent, is this danger? These matters are taken up in detail in an article to be posted in September 2023. For now, it is enough to note that there is far less complacency about this possibility than was the case even a few years ago, given the extraordinary, indeed exponential, acceleration of progress in AI over the past decade. That progress is based on the deep learning approach, which uses huge, multi-layered artificial neural networks with analogies to the structure of the human brain.

A striking feature of deep learning AI is that even the developers of the most successful applications cannot explain the exact reasoning processes the neural nets use to produce their results, such as a counter-intuitive but successful move in Go. This is true of OpenAI, the company that produced ChatGPT, and of the British firm DeepMind, which shocked East Asia in 2016 by producing an AI that beat the world champion at the game of Go, a feat then thought well beyond the AI of the day.

There is a black box quality to what goes on in the depths of a trillion-parameter neural network of the scale of GPT-4 (the AI model underpinning the latest iteration of ChatGPT), giving rise to what those in the field term the problem of interpretability. This is related to the phenomenon of emergence, the tendency of neural networks to acquire abilities that no-one intended or anticipated once the networks and the data they are trained on reach sufficient scale. Tristan Harris, a computer scientist at the Center for Humane Technology, was disconcerted that it took two years to discover that GPT-3, an earlier version of the model behind ChatGPT, had somehow learned to do quite high-level chemical analysis.

Among other things, this complicates judgements about how close we are to super-intelligent AI (AGI). If even the technologists are unsure what is going on within the depths of large neural networks, and what abilities they might acquire, then AGI might emerge quite suddenly and unexpectedly, as even the experts most sceptical about recent developments concede. A team of fourteen Microsoft researchers who spent six months evaluating GPT-4 titled their report Sparks of Artificial General Intelligence: Early Experiments with GPT-4, after dropping the even more striking First Contact with an AGI System.

This has led to calls for a pause, or even a halt, in the further development of these powerful systems until there is a better understanding of where things stand. Those making such calls include some of the foundational figures in the deep learning field, among them two of the three winners of the 2018 Turing Award, the computing world’s equivalent of the Nobel. Geoffrey Hinton, often called the Godfather of AI, is the most vocal, having revised his earlier view that AGI was decades away. He resigned from Google so he could express his concerns more freely. This is not a unanimous view, by any means: Yann LeCun, the chief scientist at Meta and the third winner of the Turing Award, is the most prominent sceptic, tweeting “the most common reaction by AI researchers to these prophecies of doom is face palming”.

Nonetheless, in May 2023 a Who’s Who of the key figures currently driving developments in the field, including the developers and scientists behind the most powerful AI technologies, signed the following statement:

Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

So, if the danger of misaligned AI needs to be a global priority, what kinds of measures can be taken? How can AI systems be designed so that they are guaranteed not to infringe on human interests, if the system is far more intelligent than any human? Who would get to decide what protection of human interests entails?

And even if widespread—indeed global—agreement could be reached about this, what about the problem of emergence mentioned above, the ability of complex AI systems to develop unexpected and unintended properties and capabilities, including goals antithetical to human interests? According to a paper from the Center for AI Safety:

Goals can also undergo qualitative shifts through the emergence of intra-system goals. In the future, agents may break down difficult long-term goals into smaller subgoals. However, breaking down goals can distort the objective, as the true objective may not be the sum of its parts. This distortion can result in misalignment. In more extreme cases, the intra-system goals could be pursued at the expense of the overall goal.

So, if an AI is given an overall goal, then to maximize its ability to achieve that goal it may adopt as a subgoal the instrumental imperative to gain as much power as possible. The theorist Joe Carlsmith explores this in detail in a recent paper, Existential Risk from Power-Seeking AI (March 2023), where he characterizes the features of a power-seeking AI and argues there will be powerful incentives (commercial, economic, strategic) to deploy such systems and that, once this happens, “the problem scales to the permanent disempowerment of our species.”

Carlsmith believes the likelihood of this occurring by 2070 is “disturbingly high”, greater than ten percent, which is in line with Ord’s estimate of the existential risk from unaligned AI, as well as the mean estimate from the expert survey mentioned above.

This puts a sobering light on the claims, often heard in both mainstream and scholarly media, that we should focus on the immediate effects of AI and stop worrying about what are described as improbable, science-fiction scenarios. The problem is that, due to the AI acceleration of the past decade, together with rapid progress in related technologies like quantum computing, it now seems these dangers are more probable, and possibly much more imminent, than previously thought. And the danger is first-order existential. As OpenAI CEO Sam Altman put it, if things go bad it could be “lights out for all of us”.

So, what is to be done?

Within the industry, there is wide in-principle acknowledgement that there needs to be much more emphasis on AI safety research, on which efforts are minuscule compared to the frenetic pace of work on AI capabilities. There are legislative efforts to codify a set of rules concerning AI development, the most advanced being the AI Act being developed by the European Parliament, and two bills under consideration by the US Congress, one of which focusses on safeguards and transparency, the other on maintaining the US competitive edge in the field. Then there are the calls for a pause in the testing of powerful AI models mentioned earlier.

So, there is good reason to think we stand on the threshold of developments of immense consequence for the long-term future of our species. We are truly on a precipice—and the decision timeframe may be measured in years, rather than decades, let alone centuries (as envisaged by Parfit in his original statement).

The future possibilities that have been projected range from utopia to dystopia, and everything in between. The former includes OpenAI CEO Sam Altman’s vision of a future of abundance, with the cost of information and energy trending toward zero and a global universal basic income removing the scourge of poverty. The latter possibility we see taking shape in Xinjiang in China, with AI-powered technologies of surveillance and control making possible digital panopticons more powerful, and more stable, than the fictional dystopia depicted in Orwell’s 1984.

This would be an extremely tough problem, even given the best will in the world by all concerned. How unfortunate, then, that the sudden salience of the AI safety problem has coincided with the worst escalation of geopolitical tensions since the darkest days of the Cold War, due to the emergence of a coalition of autocratic powers comprising the CCP regime in China, the Putin dictatorship in Russia, and the Shia Islamist regime in Iran. We are in the midst of the first major land war in Europe since WWII and face the real possibility of armed conflict in the Indo-Pacific in the next five years if the CCP regime moves against Taiwan.

With the CCP emerging as a peer competitor to the United States in the main determinants of national power—economic, scientific and technological, and military—this autocratic coalition poses a more serious threat to the global liberal democratic order than even the communist bloc during the Cold War.

Moreover, unlike the Cold War, where the ideological blocs were more or less hermetically separated, in the modern networked world with deep linkages between the autocracies and the democratic powers in trade, investment, supply chains, globalized manufacturing, technology transfer, education and culture, the autocracies can exert influence in the democratic West in ways that would have been inconceivable in earlier eras.

This includes the CCP’s remarkably successful influence operations, designed to suborn Western governmental, corporate, media and educational elites in the pursuit of its objectives. This extends even to popular culture, with Hollywood film producers extremely reluctant to produce anything that might offend the CCP and risk losing access to China’s vast market, and to universities that, shamefully, have in some cases become effective censors of staff and students who criticize the regime.

The Ukraine war and the Covid pandemic both underscored the risks inherent in these dependencies. Putin ruthlessly, if ultimately unsuccessfully, used Europe’s dependence on Russian oil, gas and coal to pressure the European democracies to abandon, or at least downgrade, their support for Ukraine’s resistance to Russian aggression. The Covid pandemic cast a harsh light on the West’s dependence on China for the supply of Personal Protective Equipment (PPE), the masks, gloves, and protective clothing needed by both health professionals and the general population, as well as a high proportion of rapid antigen test kits.

Furthermore, almost all of the Western democracies have committed to a massive, and highly problematical, energy transition to replace hydrocarbon fuels with renewables, predominantly wind, solar, and hydro where available, combined with battery storage. The task is made even more difficult by the ongoing aversion to nuclear energy in some nations, with Australia’s uniquely stupid ban on even the consideration of nuclear power the most extreme example. There are growing questions as to whether an energy transition that relies overwhelmingly on the existing generation of renewables is really capable of achieving the deep decarbonization that those concerned about climate change advocate, especially if nuclear is precluded.

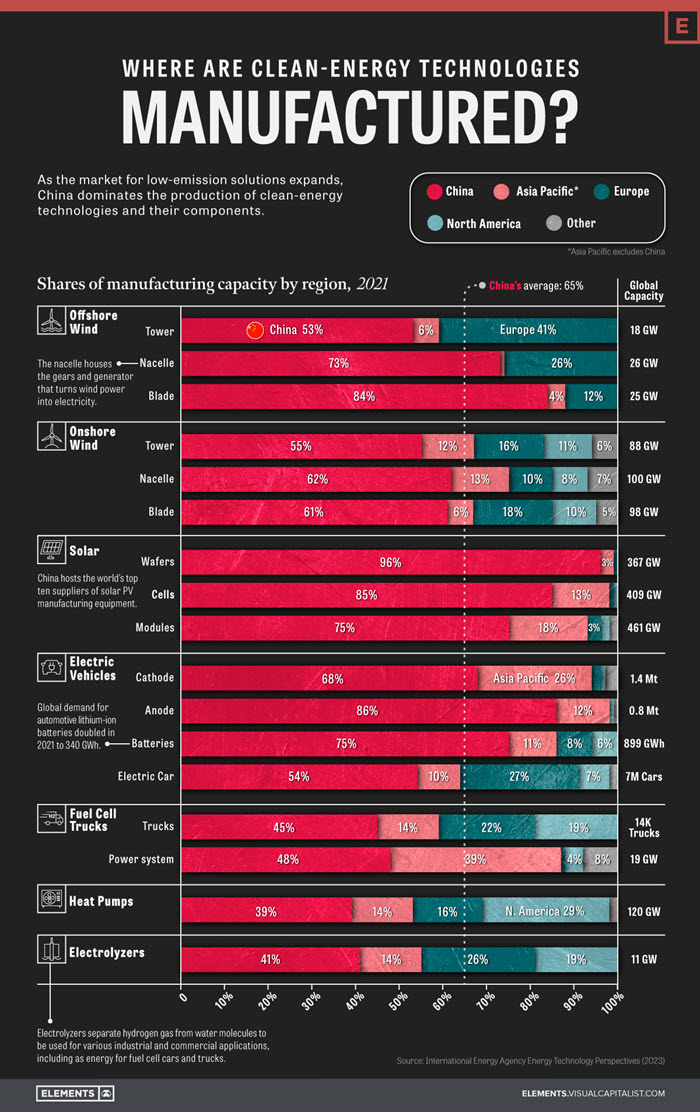

A further point, of great significance to the geopolitical contest between the democracies and the autocratic alliance, is that the CCP has managed to achieve an overwhelmingly dominant position in the supply of the key minerals and manufactures necessary to the wind/solar energy transition, resulting in strong supply-chain dependencies for nations that have opted to go down this route. Australia is in an especially parlous position, given the nuclear prohibition and the lack of any available “extension cord” to nearby countries with more abundant energy resources. This accords with the regime’s goal of moving to a dual-circulation economic model, which aims to minimize China’s dependence on the outside world while maximizing the latter’s reliance on China for essential items.

How well is the West prepared to meet this challenge, in light of the self-deprecating cultural revolution that has swept through most of the democracies, undermining their civilizational self-confidence by inducing what some writers have described as a “tyranny of guilt”, especially among the educated classes?