There is a scene in Oppenheimer, Christopher Nolan’s brilliant new film about the head of the scientific team that developed the first atomic bomb during World War II, that depicts an exchange between J. Robert Oppenheimer and Colonel (later General) Leslie Groves, the military officer in overall charge of the project.

The film dialogue goes as follows:

Groves: “Are we saying there’s a chance that when we push that button, we destroy the world?”

Oppenheimer: “The chances are near zero.”

Groves: “Near zero?”

Oppenheimer: “What do you want, from theory alone?”

Groves: “Zero would be nice!”

Nolan is engaging in a bit of cinematic license here. There is no evidence that this specific dialogue occurred.

There was, however, genuine concern among Oppenheimer and some of the other scientists, especially Edward Teller, who was key to the later development of the hydrogen bomb, that the extreme heat and conditions created by detonating a fission bomb could trigger a chain of fusion reactions in the earth’s atmosphere and oceans that would destroy humanity, and possibly all life on earth.

The first public disclosure of these concerns came in a 1959 interview by the American writer Pearl S. Buck with Arthur Compton, a Nobel laureate physicist and Oppenheimer’s immediate boss during the bomb development. In the interview Compton described a discussion he had with Oppenheimer in the leadup to the first detonation. The Compton-Buck interview included this exchange:

Compton: “Hydrogen nuclei are unstable, and they can combine into helium nuclei with a large release of energy, as they do on the sun. To set off such a reaction would require a very high temperature, but might not the enormously high temperature of the atomic bomb be just what was needed to explode hydrogen?”

“And if hydrogen, what about hydrogen in sea water? Might not the explosion of the atomic bomb set off an explosion of the ocean itself? Nor was this all that Oppenheimer feared. The nitrogen in the air is also unstable, though in less degree. Might not it, too, be set off by an atomic explosion in the atmosphere?”

Buck: “The earth would be vaporized?”

Compton: “Exactly. It would be the ultimate catastrophe. Better to accept the slavery of the Nazis than to run the chance of drawing the final curtain on mankind!”

Later in the interview, Compton related the criterion the scientists used to decide whether the explosion should proceed:

Compton: “If, after calculation it were proved that the chances were more than approximately three in one million that the earth would be vaporized by the atomic explosion, he would not proceed with the project. Calculation proved the figures slightly less – and the project continued.”

Three in one million? That is roughly the same order of magnitude as the risk of dying in an accident when you take a scheduled airline flight, and that makes plenty of us nervous. Yet the stakes were unimaginably higher—the survival of humanity, and of untold future generations, maybe trillions of people.

Oppenheimer and his colleagues were grappling with an ethical dilemma of a kind never before faced by humanity, one in which the stakes were literally existential for our species. Talk of existential risk is a common figure in public debate these days, especially in relation to climate change. But even Michael Mann, one of the most zealous scientific campaigners for climate action, concedes “there is no evidence of climate change scenarios that would render human beings extinct.”

First-order existential dangers have always been around, from purely natural sources, mainly cosmic events like the remote chance of a world-destroying collision with a large asteroid or comet. But the odds of such an event occurring in any given year are minuscule. And in any case, until very recently there was absolutely nothing that could be done to prevent one.

The bomb conundrum was the first time anyone had to contemplate an anthropogenic (human-created) risk of this magnitude – but it almost certainly will not be the last given the proliferation of potentially dangerous (as well as beneficial) new technologies, most recently artificial intelligence (AI).

How do you decide, when facing a possibility like this, however remote? Was Groves right to insist on zero risk (well, he said it would be nice)? Moreover, as posed by Oppenheimer in the fictional dialogue, what level of reassurance can “theory” possibly provide?

How do you go from minuscule to absolute zero, given the propensity of even the most talented scientists to make modelling errors, amply demonstrated by the history of nuclear weapons development?

In the mid-1970s there was an exchange on this issue in the pages of the Bulletin of the Atomic Scientists. This journal was founded after the war by Albert Einstein and a number of the scientists involved in the Manhattan Project who wanted to inform the public about the dangers of a nuclear arms race.

One of the contributors was Hans A. Bethe, who headed the Theoretical Division at Los Alamos and had a key role in designing the implosion mechanism of the plutonium bombs used at the Trinity test and the Nagasaki bombing.

In the June 1976 edition of the Bulletin, Bethe ridiculed the concerns about a world-ending catastrophe, insisting that:

… there was never any possibility of causing a thermonuclear chain reaction in the atmosphere. There was never "probability of slightly less than three parts in a million" as Dudley claimed. Ignition is not a matter of probabilities; it is simply impossible.

This is dishonest. The Dudley mentioned was a radiation physicist who kicked off the Bulletin debate and was not involved in the Manhattan Project. However, it was Arthur Compton, not Dudley, who made the claim cited, as the Pearl Buck interview makes clear.

Bethe based his assertion that there was zero risk on the analysis in a paper done by the bomb scientists before the Trinity detonation. The paper was circulated within the government in August 1946, and declassified in 1973. It has since been posted on the public web.

The paper is highly technical, but you do not have to be a physicist to grasp the implications of its conclusion, which reads:

One may conclude that the arguments of this paper make it unreasonable to expect that the N + N (nitrogen) reaction could propagate. An unlimited propagation is even less likely. However, the complexity of the argument and the absence of satisfactory experimental foundations make further work on the subject highly desirable.

Unlikely, but not zero, as claimed by Bethe.

But note the final sentence, which bespeaks the scientists’ lack of confidence in their analysis, acknowledging that “further work” was highly desirable. But not before the bomb was detonated, apparently!

Here is the problem: scientists make mistakes, not just calculation errors, but theoretical misconceptions in their modelling of what is going to occur, especially with experiments that create new and extreme conditions. The development of nuclear weapons provides two confirmations of this fallibility.

The first concerns the wartime German atomic bomb program, which was able to draw on some of the most talented physicists in the world, including the pioneer of quantum theory Werner Heisenberg, who was the scientific head of the program. Fear of a Nazi bomb was a huge motivating factor for Oppenheimer and other Manhattan Project scientists.

Yet, the Germans never came close to getting atomic weapons, and by 1942 had all but abandoned any serious attempt to develop them. After the war, some of the German scientists, including Heisenberg, tried to claim they had deliberately sabotaged the German program.

This was refuted by secret recordings of the scientists while in British captivity at a country estate called Farm Hall. The truth was that Heisenberg made an error calculating the critical mass of enriched uranium needed to produce an explosion, based on an incorrect understanding of how the fission process spreads in a chain reaction.

Heisenberg estimated that thirteen tons of enriched uranium would be needed, an industrial challenge so vast that a practical bomb seemed all but impossible. So confident was Heisenberg in his calculations that he initially insisted reports of the Hiroshima bombing were fake Allied propaganda. But he was wrong: the Los Alamos scientists correctly calculated the critical mass at around 60 kg.

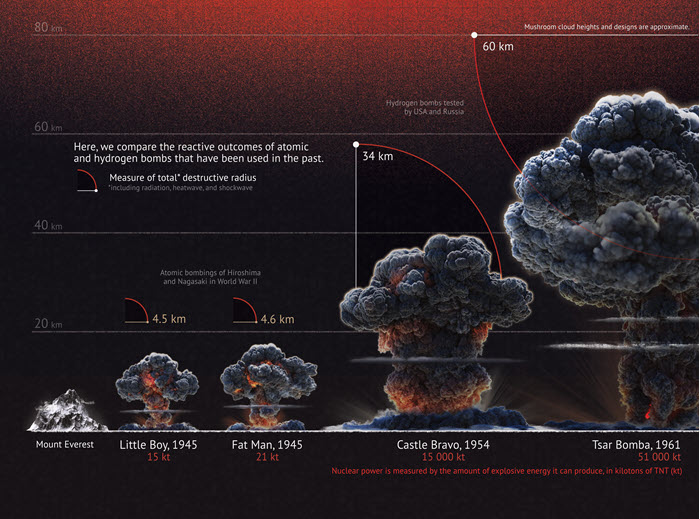

In the post-war period, there was a race to develop an even more powerful weapon, the hydrogen bomb, that utilizes the release of energy when two isotopes of hydrogen are fused together, producing an explosive yield dwarfing that of fission bombs. The first test in 1952 required the use of liquid hydrogen, making it impractical as a weapon.

To address this, the scientists came up with a new design in which a hydrogen isotope (deuterium) was combined with lithium to form a solid, lithium deuteride, that could be carried in an aircraft.

This was detonated in the Castle Bravo test in the South Pacific in March 1954. Castle Bravo produced a far larger explosion than the scientists had calculated, terrifying observers as the fireball grew larger and larger, with a yield of 15 megatons compared to the estimate of 5-6 megatons.

So, what happened? There was an unexpected reaction involving an isotope of lithium that the scientists thought would be inert. Whoops!

Since then, the process of physics experimentation has continued, with new existential concerns being raised from time to time, as occurred during the leadup to the opening of CERN’s Large Hadron Collider (LHC) in October 2008.

The British astrophysicist and former Astronomer Royal Martin Rees described the worries of some physicists about the LHC in his book On the Future: Prospects for Humanity (2021). They included the possible creation of stable black holes that might consume the earth, or the appearance of theorized cosmological objects called strangelets that could transform the Earth into a hyperdense sphere about a hundred metres across, or even a catastrophe that causes a rip in spacetime.

The worries about the safety of the LHC were taken seriously enough for CERN to commission a detailed investigation of these concerns, which provided assurances that the fears were unwarranted. But the conundrum remains. For example, the report drew on an estimate made by the Brookhaven National Laboratory in the US that put the probability of a strangelet catastrophe at about one in 50 million.

Which doesn’t sound too bad—for anything other than a first-order existential threat that risked bringing down the curtain on the human story. Moreover, there remains the concern that the estimates may be wrong because of theoretical misconceptions, especially with physics experiments that create unprecedented extreme conditions. According to Rees:

If our understanding is shaky—as it plainly is at the frontiers of physics—we can’t really assign a probability, or confidently assert that something is unlikely. It’s presumptuous to place confidence in any theories about what happens when atoms are smashed together with unprecedented energy.

So, we might say, with General Groves in the movie dialogue, zero probability of an existential catastrophe would be nice, but seems unobtainable. So, should we stop all risky experimentation that creates extreme or unprecedented conditions in physics and other fields, not least molecular biology with its risk of lethal pandemics?

That would mean denying humanity the benefits that powerful new technologies might bring, including an increased ability to avoid existential risks.

Take, for example, the danger posed by an asteroid capable of completely destroying human civilization. According to NASA's Center for Near-Earth Object Studies (CNEOS), the chance of a civilization-ending impact event in any given year is about 1 in 500,000. The probability is very low, but the effect is potentially very severe, even species-extinguishing.

Until very recently, there was literally nothing humanity could do to avoid such an eventuality. Modern technology—including nuclear weapons and rocketry—has changed that situation. According to a NASA study “nuclear standoff explosions were likely to be the most effective method for diverting an approaching near-Earth object”.

The debate about existential risk has taken on a new urgency with the spectacular acceleration of progress on artificial intelligence (AI) over the past several years, a development that has well-and-truly entered the public consciousness with the release of ChatGPT and other Large Language Model (LLM) applications since November 2022.

AI is expected to have all manner of impacts, for good or ill, but the existential fears have arisen from the sense that we could be much closer to artificial general intelligence (AGI), the ability to match or exceed human capabilities across a wide range of activities and knowledge domains, than previously thought.

What happens if an AGI emerges that is assigned, or develops on its own, goals incompatible with human values? What if such an AGI were to embark on a process of recursive self-improvement, rewriting its own code, producing an intelligence greater not just than that of any individual human, but than the collective intelligence of humanity, making the leap from intelligence to super-intelligence?

Our continued existence, or at least the terms on which we are allowed to continue, would be at the discretion of an entity beyond our comprehension that may have values very different to our own. Those in the field term this the “unaligned AI” problem, and some analysts of existential risk believe it is the greatest threat we face.

Until recently, this would have seemed like a science fiction scenario, something that might arise centuries, or at least many decades, in the future. Now, a large and growing preponderance of opinion among leading developers and specialists in the field is that this is a scenario that we could face much earlier and need to be preparing for now.

These concerns have prompted calls for a pause, or even a permanent halt, in advanced AI developments. In May 2023, a veritable who’s-who of the AI world, including representatives of just about all of the major Western corporations working in the field (including Google DeepMind, Microsoft, OpenAI, Meta, Anthropic), signed a one-sentence statement that reads:

Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

What a pity, then, that this challenge has coincided with the worst deterioration of inter-state relations since the height of the Cold War, as a coalition of dictatorships dominated by the CCP regime in China seeks to rewrite the rules underpinning the international order and create a world “safe for autocracy”.

In 2017 the CCP embarked on an aggressive program to achieve AI supremacy by 2030, a chilling prospect given the applications of this technology the regime has decided to prioritize, including developing systems of surveillance and control that could make the emergence of organized dissent virtually impossible—with the unfortunate Uyghur people in Xinjiang serving as a testbed for the most intrusive developments.

This enormously complicates the chances of a credible, verifiable international agreement to minimize the dangers of advanced AI. In 2021 a commission chaired by former Google CEO Eric Schmidt warned that if the United States unilaterally placed guardrails around AI it could surrender AI leadership.

Just as with the 1940s atomic bomb program, the impetus to develop a powerful and dangerous new technology is driven by the prospect that a malign opponent might steal a march, leading to a future world dominated by a technology-empowered totalitarian hegemon. A chilling prospect if those who argue artificial general intelligence could be imminent turn out to be right.